Concepts covered in this article: Business Intelligence, Four Broad Disciplines

Whenever I answer the question “what do you do for work?”, the next question I typically get is “what’s business intelligence?” Since those two questions are the very same ones that started me on my journey in this field, I thought it’d be an appropriate place to start this series of articles.

Business intelligence is about having the right information at the right time in order to make informed decisions for your business. The form this information takes can vary widely – ranging from a piece of paper you carry in your pocket, to bank statements, all the way to a set of digital reports that update automatically and answer all sorts of questions. Business intelligence (or, as I’ll refer to it often here, ‘BI’) doesn’t have to apply just to business either; the same tools used by retailers and manufacturers can be used to support public health decisions, research projects, nonprofit causes, or personal goals.

The above definition is what the users experience, and what we at Source to Share work to provide. However, there’s a whole world going on underneath the final reports that the user doesn’t see. That world can be separated into four broad disciplines that progress naturally from one to the next.

Four Broad Disciplines of Business Intelligence

- Data Prep

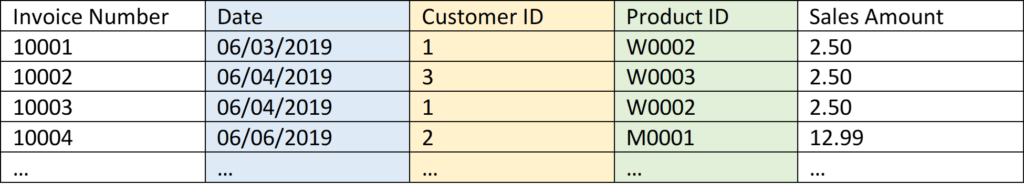

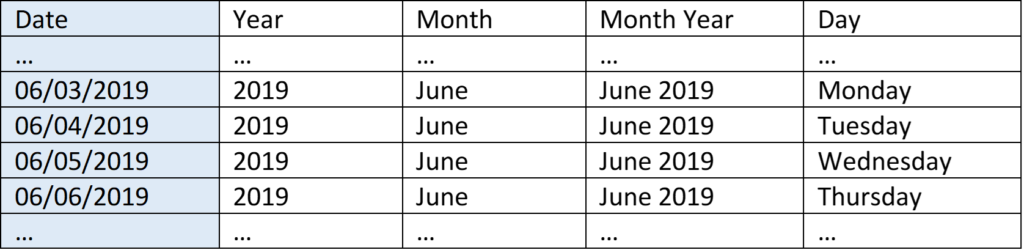

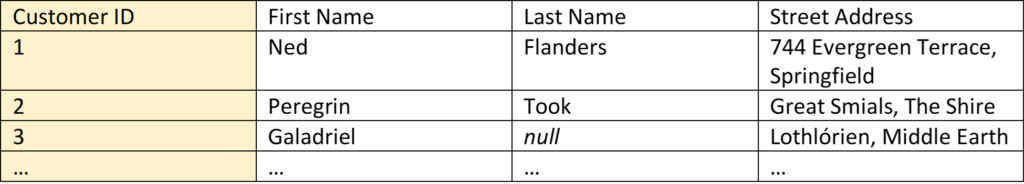

This is usually the first step in any BI process. Data prep starts with literally finding the data (it isn’t always in one place), then looking at it to make sure it’s valid. To prepare the data for modeling, it is often converted from one format to another, and tables are combined, split apart, and/or cleaned up. Then the whole slew is loaded into reporting software.
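To make those steps a little more concrete, here is a minimal sketch in plain Python. It is only an illustration, not how any particular BI tool does it: the table names, column names, and values are all made up, and `clean_sale` and `prepare` are hypothetical helpers. It validates rows, converts text to numbers, tidies up product names, and combines two tables into one.

```python
# Hypothetical raw data found in two different places (all names and values are made up).
raw_sales = [
    {"customer_id": "1", "product": " Widget ", "amount": "19.99"},
    {"customer_id": "2", "product": "widget", "amount": "5.00"},
    {"customer_id": "2", "product": "Gadget", "amount": ""},  # invalid: missing amount
]
raw_customers = [
    {"customer_id": "1", "name": "Ada"},
    {"customer_id": "2", "name": "Grace"},
]


def clean_sale(row):
    """Return a cleaned copy of a sales row, or None if the row is invalid."""
    if not row["amount"].strip():
        return None  # validation: a sale without an amount is not usable
    return {
        "customer_id": int(row["customer_id"]),     # text -> integer
        "product": row["product"].strip().title(),  # tidy whitespace and casing
        "amount": float(row["amount"]),             # text -> number
    }


def prepare(sales, customers):
    """Validate and clean sales rows, then combine them with customer names."""
    names = {int(c["customer_id"]): c["name"] for c in customers}
    prepared = []
    for row in sales:
        cleaned = clean_sale(row)
        if cleaned is None:
            continue  # skip rows that failed validation
        cleaned["customer_name"] = names.get(cleaned["customer_id"], "Unknown")
        prepared.append(cleaned)
    return prepared


prepared_sales = prepare(raw_sales, raw_customers)
```

In a real project the same ideas would typically be carried out inside a dedicated data prep tool rather than hand-written code, but the logic (find, validate, reshape, combine) is the same.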

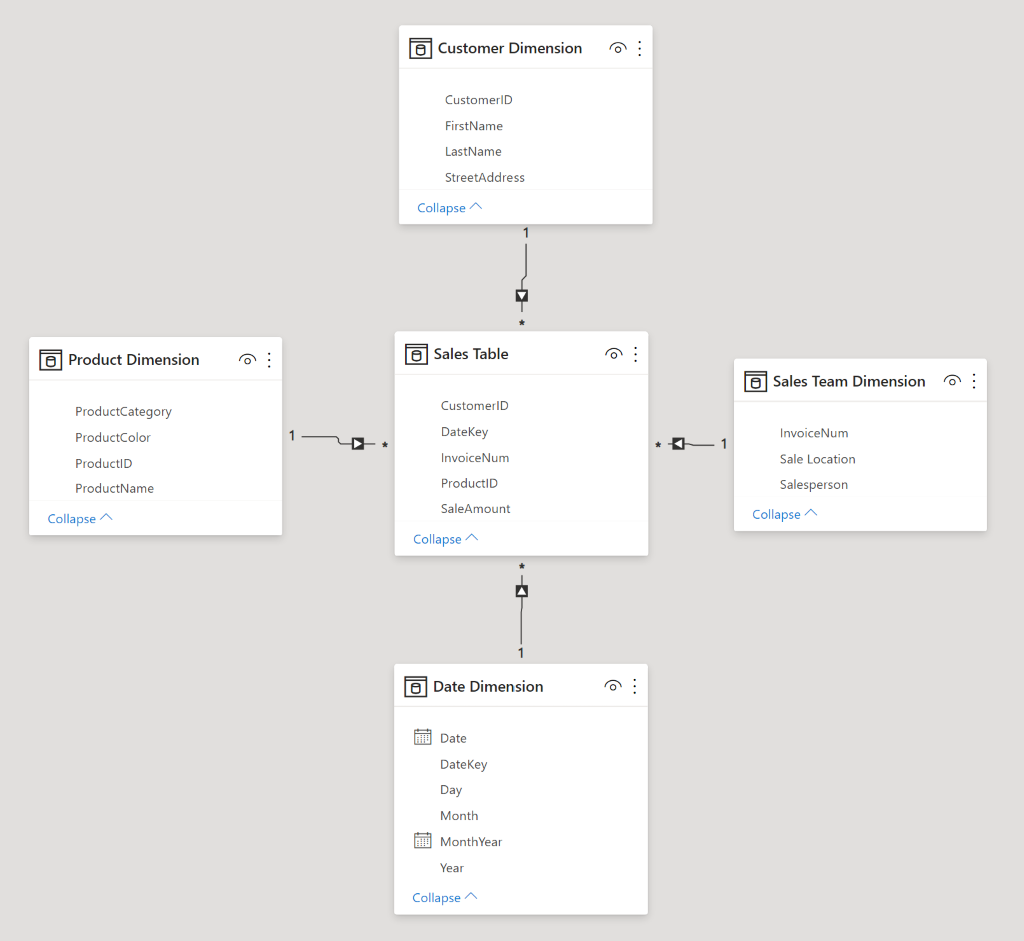

- Data Modeling

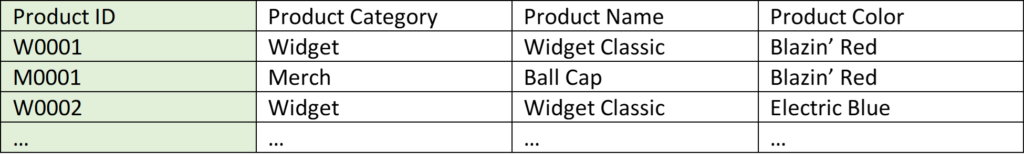

This is the practice of organizing data into meaningful groupings (customers, products, sales, etc.) and defining relationships between them. The modeling we engage in is called ‘semantic modeling’, and it enables users to easily locate data (or attributes) and navigate their relationships.
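As a rough illustration of groupings and relationships, the sketch below models customers, products, and sales as small Python classes. Everything here is an assumption made for the example (the class names, fields, sample data, and the `products_bought_by` helper); a real semantic model lives inside reporting software, not in a script.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str


@dataclass(frozen=True)
class Product:
    product_id: int
    name: str
    category: str


@dataclass(frozen=True)
class Sale:
    customer_id: int  # relationship: each sale belongs to one customer
    product_id: int   # relationship: each sale is for one product
    quantity: int


# Hypothetical sample data, keyed by ID so relationships are easy to follow.
customers = {1: Customer(1, "Ada"), 2: Customer(2, "Grace")}
products = {
    10: Product(10, "Widget", "Hardware"),
    11: Product(11, "Gadget", "Hardware"),
}
sales = [Sale(1, 10, 3), Sale(2, 10, 1), Sale(2, 11, 4)]


def products_bought_by(customer_name):
    """Follow the relationships from a customer, through sales, to product names."""
    ids = {c.customer_id for c in customers.values() if c.name == customer_name}
    return sorted({products[s.product_id].name for s in sales if s.customer_id in ids})
```

Calling `products_bought_by("Grace")` walks from the customer grouping, through the sales, to the product grouping, which is the kind of navigation a good model makes easy for users.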

- Data Measurement

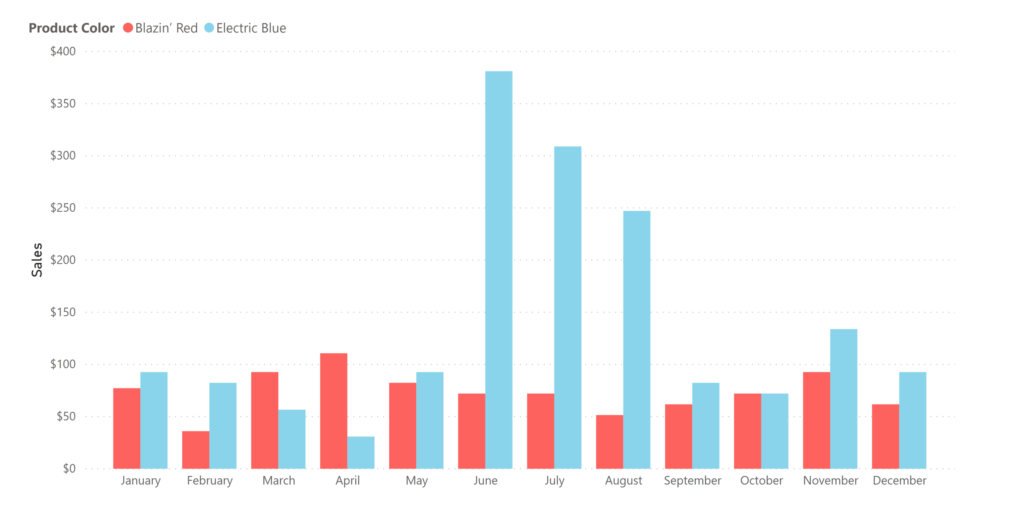

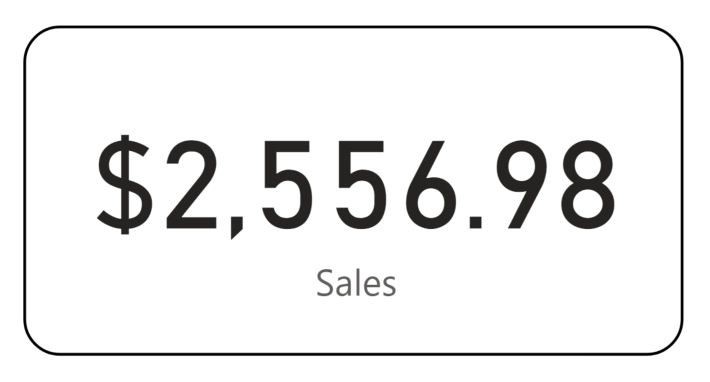

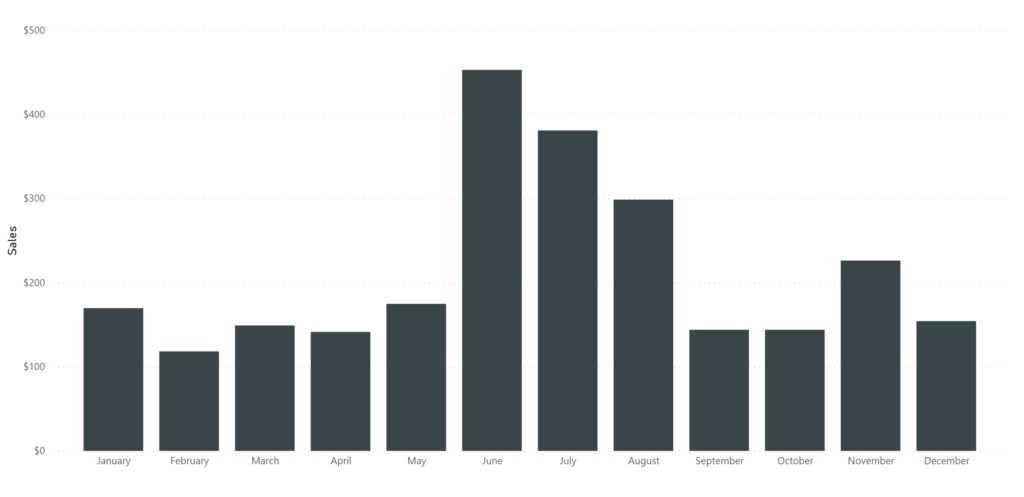

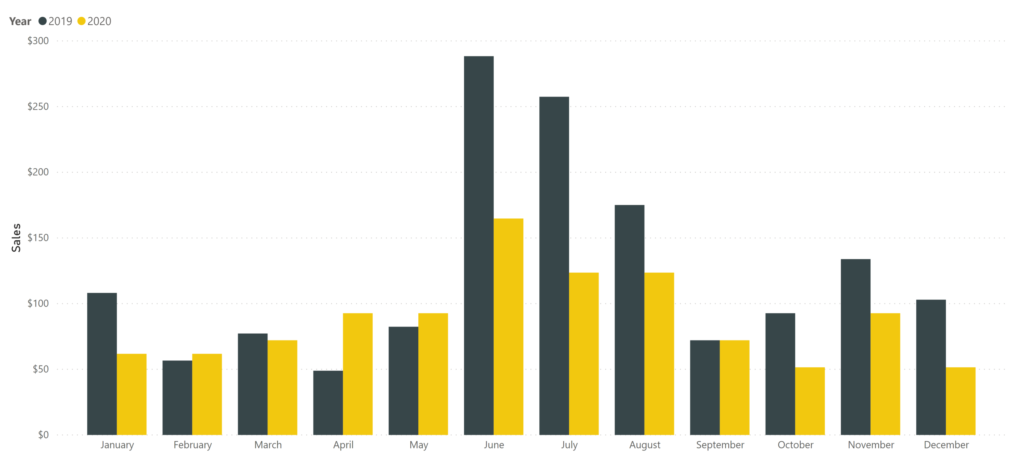

This is the practice of overlaying computations on the dataset. This could be as simple as summing up sales so we can know just how many widgets we’ve sold (and comparing that to our widget budget), or as complicated as forecasting how many widgets we expect to sell next spring.
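The simple end of that spectrum can be sketched in a few lines of Python. The monthly figures and the function names below are invented for illustration; the point is only to show a measure (total widgets sold) being computed and compared against a budget.

```python
# Hypothetical monthly widget sales and budget, in units (all numbers are made up).
widgets_sold = {"Jan": 120, "Feb": 95, "Mar": 140}
widget_budget = {"Jan": 100, "Feb": 100, "Mar": 130}


def total_units(by_month):
    """Sum the units across all months."""
    return sum(by_month.values())


def variance_to_budget(actual, budget):
    """Actual minus budget for each month (positive means ahead of budget)."""
    return {month: actual[month] - budget[month] for month in actual}


total_sold = total_units(widgets_sold)
total_budget = total_units(widget_budget)
monthly_variance = variance_to_budget(widgets_sold, widget_budget)
```

Forecasting next spring’s sales would need a statistical model on top of numbers like these, which is well beyond this sketch.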

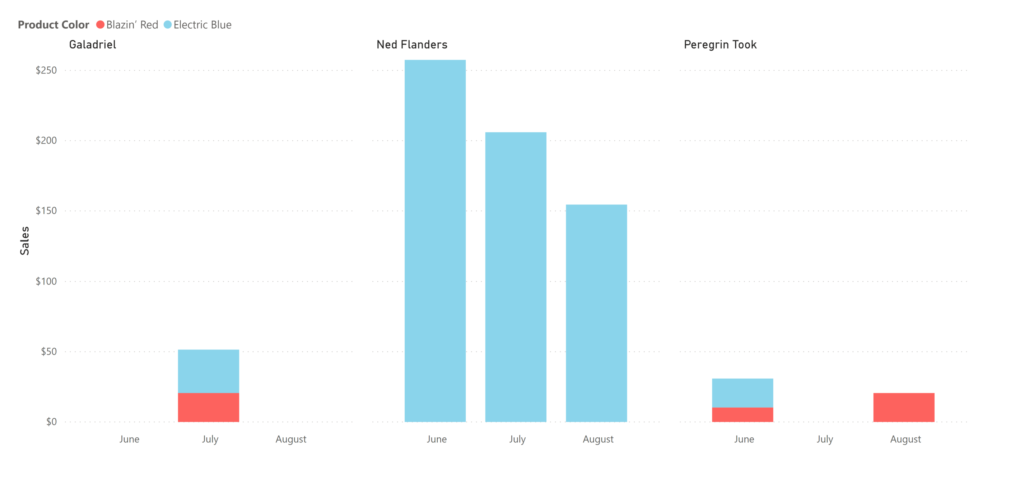

- Data Visualization

This discipline is often overlooked in BI, but it is VERY important. Not all visualizations are equally useful, and some, like the common pie chart, are as overused as they are ineffective at conveying information accurately. The reports created in this step are what’s used to drive decision-making, and there is both a science and an art to creating useful, beautiful reports.

It’s important to note that during a BI project, we’ll often jump around from one discipline to the next. After some basic data prep and data modeling, we might start mocking up some crude visualizations. As we run into challenges creating reports, we’ll work to solve them at whatever stage is best. Often it is much more effective to solve problems upstream, which is the topic of an entire article later in this series.

I created this article and the rest of the series as I tried to answer the question ‘what is Business Intelligence?’ in greater and greater depth. They’re designed as a learning tool for myself and others – a way to document my learning and create a space for the rest of the crew at Source to Share to give feedback and fill gaps in my knowledge.

Who am I anyway?

The titular question of this post is what started my journey with business intelligence. I was at a party, and I asked my future coworker Bo what he did for work. He said business intelligence, and I bet you can guess my next question for him. I was drawn in. Several months later I was asking the Source to Share crew if they needed any extra help.

Before I got involved in BI, I had been running operations at an arts nonprofit. During my time there I learned bookkeeping, accounting, finance – I even filed their taxes. My education and experience had been what I’d describe as BI-adjacent. In school I studied psychology, and I focused on study design and quantitative methodology – the how and why of doing statistical analysis. I had always been intensely interested in data, data visualization, and how common errors in either could lead to misinterpretations with often serious consequences.

When I started at Source to Share, I was brought on to learn the BI ropes and help with the increased demand they were experiencing – and then COVID-19 hit. I shifted from learning while looking over the shoulders of our Senior Analysts to learning from afar. We discussed me documenting my BI education as a learning tool, and thus this series was born.

Another facet of my life that’s important to mention – I’m a licensed counselor in Washington State. My training in communication and emotional literacy helps me connect with clients both in the counselor’s office and in the BI office, and those skills serve as a scaffold for these articles.

A Note About How Best to Use These Articles

These articles were born out of our collective desire at Source to Share to create learning resources that contribute to the BI community. They track my learning adventure, documenting the topics that were foundational to my growth as an analyst. They provide a sequential pathway to grasping the ‘big picture’ of the field of business intelligence. You can zoom out and take a look at the map here.

It’s important to note that I didn’t learn BI on my own. Self-directed study was a big part of my journey, but throughout the entire process I was aided by my coworkers Bo and Derek. Both are deep wells of knowledge and experience, and I often call them (remember, COVID-19) at all semi-reasonable hours of the day with questions and to check my understanding. These articles are written to reflect that. I cover topics to the best of my knowledge, while Bo and Derek add important technical detail, nuanced corrections, and personal flair. We’ll make sure to highlight who’s talking with text boxes.

Each article starts with three very important lines of text:

- The Discipline of Power BI this article (roughly) falls under

The disciplines (from above) are Data Prep, Data Modeling, Data Measurement, and Data Visualization. Some articles will span multiple disciplines – in practice they overlap constantly.

- Concepts to know before reading this article

The concepts that make up the business intelligence world build on one another. Once we’ve covered a topic with an article, we’ll assume understanding of that topic in future articles. If you see terms here that you aren’t familiar with, go back and read the related articles. The learning map can help you find the article you’re looking for.

- Concepts covered in this article

This line lists which new topics we’ll be covering. I encourage you to organize the notes you take around these headers. Speaking of which, I encourage you to take notes (and I’ll be reminding you to do so throughout).

At the end of each article I’ll Share a list of Sources (see what I did there?) that helped me learn so that you can engage in your own further research. Of course, becoming a professional in the field requires additional study and practice. However, it’s my hope that anyone who really digests these articles will gain a deep understanding of the vocation and carry with them a logic that they can use to reason their way through any BI challenge they face.

I also strongly encourage you to take it slow. These articles weren’t written in a day, and they weren’t designed to be consumed in an afternoon, or even a week. Gaining confidence with business intelligence concepts takes consistent, measured effort. Give yourself time to write notes, integrate what you’ve learned, take stretch breaks, and even share what you’ve learned with others. Bon voyage!

Sources

- Derek Rickard: Founder, Consultant – Source to Share

- Bo Stewart: Analyst, Consultant – Source to Share